About a year ago, I bought a smart home water monitor to keep an eye on

my water use at home. The city where I live provides a big rebate on one

particular device from San Luis Obispo company Flume. I was immediately curious

about how this device could work and was excited to open the box when it

arrived and inspect the contents.

There are two hardware components in this system. One component, the sensor, is

designed to be physically strapped to your water meter. The other component,

the bridge, receives information from the sensor and connects to the Internet

via Wi-Fi to deliver all this data to Flume. Flume provides an API for

customers to access their own data and there’s even a Home Assistant plugin

which should help bring all this information to the platform I run at home.

But I wanted to learn more about how this all works and was curious if there

could be a way to access this data more directly. As friendly as Flume seems to

be, I do feel that if I buy a device to track my own data, that data should

belong to me. But also, it would be nice to know that if Flume ever closes up

shop or shuts down its web service, these devices could all still be

useful. So let’s take a closer look at the hardware to see what’s really

happening.

Continue reading »

In 2023, I managed to integrate my garage doors with Home Assistant using a

Shelly Uni device.

Controlled remote operation is pretty great, but I wanted to document this

project because this solution covers remote door control and door state, and even

door light control is possible, using a single $12 device with no batteries

required. The setup is easy to achieve, and leaves all the garage door opener

functionality intact.

Overview

- Shelly Uni device is powered from a 12 V DC power source leeched from the

low-voltage side of the garage door control circuit board

- Garage door switch is operated using one of the two potential-free outputs on

the Shelly Uni

- Garage door state is monitored using both Shelly Uni inputs, detecting

open/closed circuits on the garage door opener’s own state sensors

- Light control is not implemented, but could be added to the extra Shelly

Uni output in an extremely simple circuit
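To make the state monitoring idea concrete, here is a minimal sketch of how two input readings might be combined into a door state. The function name `doorState`, the state labels, and the assumption that one input follows the opener's "closed" limit sensor while the other follows its "open" limit sensor are all illustrative. They are not taken from the Shelly firmware or from Home Assistant.

```javascript
// Hypothetical helper: combine the two Shelly Uni input readings into one state.
// Assumption: each argument is true when the matching limit sensor circuit
// on the opener is closed, meaning the door is fully at that end of travel.
function doorState(closedSensor, openSensor) {
  if (closedSensor && openSensor) return 'error'; // both limits at once: wiring fault
  if (closedSensor) return 'closed';
  if (openSensor) return 'open';
  return 'moving'; // neither limit reached: in motion or stopped partway
}

console.log(doorState(true, false));
console.log(doorState(false, false));
```

Treating "neither sensor active" as a distinct state is a design choice: it lets an automation tell a door that is travelling apart from one that has reached either end.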

Continue reading »

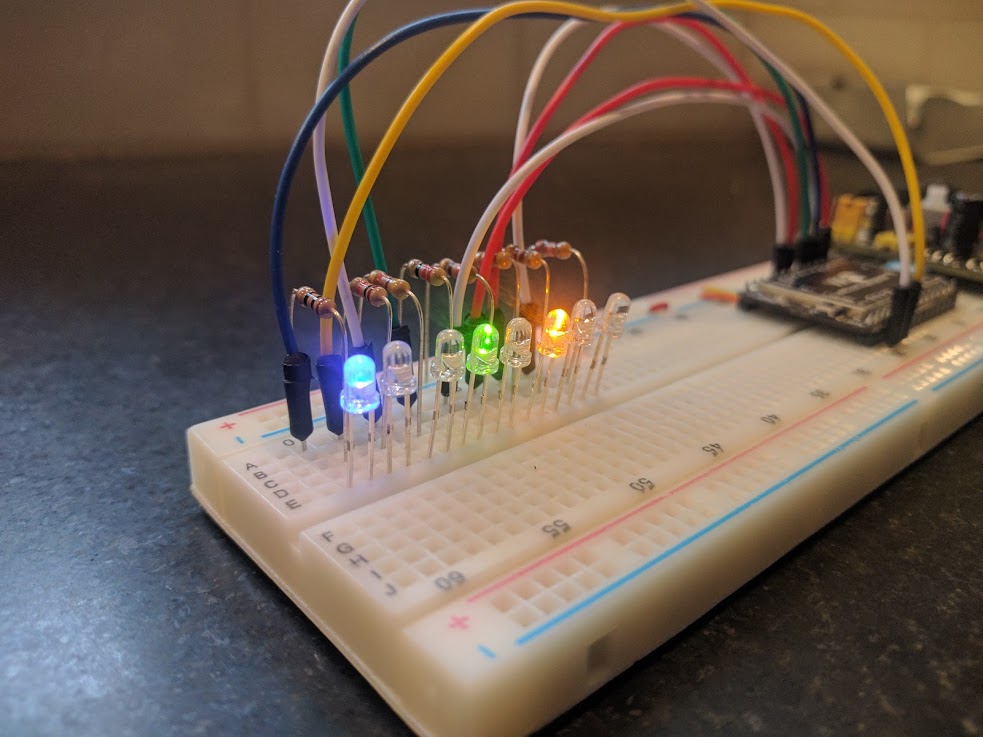

A binary clock is hardly a new idea, but this particular concept is

something I haven’t seen anywhere else. And it was idling in the back of

my mind for over a decade before finally seeing a working prototype.

Concept

The passage of time can be measured in any number of ways, but when a

clock is designed for humans, I think it should be based on a natural

concept. Since I live on Earth, I designed a clock, as many others have,

based on the smallest natural temporal concept I can readily observe:

the day.

The day is plenty useful for medium-term planning, but it lacks the

precision needed for many purposes, and so it must be divided in order

to build a useful clock. This is where clock design becomes much more

arbitrary. Dividing the day into 24 was supposedly based on astronomical

observations of various stars passing in the night, but beyond that,

there’s no natural reason there should be 60 minutes in an hour and so

on.

My thought is that the simplest and most natural way to divide a day

would be in half. This is essentially the AM/PM indicator which is a

concept so natural and necessary that even many of our 12-hour clocks

use it to avoid the otherwise ambiguous information displayed. But if

the most natural way to divide a day is in two, then maybe the next most

natural division is in two again. Dividing a day repeatedly in two

results in a kind of binary clock. And this is exactly the concept.
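As a rough illustration of repeated halving, the sketch below turns the elapsed part of a day into a list of bits. The function name `dayBits` and the choice of four bits in the examples are my own assumptions for demonstration, not details of the prototype.

```javascript
// Split the day in half repeatedly: the first bit says which half of the day
// we are in, the second which half of that half, and so on.
function dayBits(secondsSinceMidnight, bitCount) {
  const SECONDS_PER_DAY = 86400;
  let fraction = secondsSinceMidnight / SECONDS_PER_DAY; // 0 <= fraction < 1
  const bits = [];
  for (let i = 0; i < bitCount; i++) {
    fraction *= 2;
    const bit = fraction >= 1 ? 1 : 0; // 1 means the later half of the current span
    bits.push(bit);
    fraction -= bit;
  }
  return bits;
}

console.log(dayBits(0, 4).join(''));         // midnight -> 0000
console.log(dayBits(12 * 3600, 4).join('')); // noon     -> 1000
console.log(dayBits(18 * 3600, 4).join('')); // 6 PM     -> 1100
```

The first bit is exactly the AM/PM indicator, and every additional bit doubles the precision: four bits resolve 90-minute blocks, sixteen bits resolve a little over a second.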

Continue reading »

Now that TLS is free, there’s very little

excuse to be running web services over plain HTTP. The easiest way to

add TLS to this blog was through AWS Certificate

Manager and its native

CloudFront Support. But for a while, there was a problem. In order to

use a free, trusted certificate from Amazon, I needed to be using

CloudFront. In order to be using CloudFront, I needed to be able to

resolve the name ‘lithostech.com’ to a CloudFront distribution. Since

DNS doesn’t support CNAME records at the apex of a zone, that meant

switching DNS service to Route53 where Amazon has a special solution for

this problem they call alias

records.

But there was a problem because Route53 doesn’t have DDNS support and I

use DDNS to reach my home network’s dynamic IP address when I’m out of

the house. And so I put this off for quite a while, mostly because I

didn’t realize how simple DDNS really was and how easily it could be

done with AWS Lambda.

Turning to the source code for

ddclient,

a popular DDNS client that ships with Debian, I found that DDNS amounts

to nothing more than calling a tiny web API to update a remote server

with your current IP address at regular intervals. Each vendor that

provides DDNS seems to implement it differently, and so it seems there

is no specific way to do this. But in all the implementations I saw, the

design was essentially a magic URL that anyone in the world can access

and use to update the IP address of a DNS A record.

A picture was beginning to form on how this could be done with very low

cost on AWS:

- API Gateway (web accessible endpoint)

- Route53 (DNS host)

- Lambda (process the web request and update DNS)

- IAM Role (policy to allow the DNS changes)
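The Lambda piece of this picture mostly comes down to building one Route53 request. The sketch below shows roughly what that request could look like. The helper name `buildDnsUpdate`, the hosted zone ID, the record name, and the TTL are placeholders I made up for illustration; the real function is in the GitHub repository mentioned in this post and may differ.

```javascript
// Hypothetical helper: build the Route53 request that points an A record at an IP.
function buildDnsUpdate(hostedZoneId, recordName, ipAddress, ttlSeconds) {
  // Reject anything that is not a dotted-quad IPv4 address.
  const octets = String(ipAddress).split('.');
  const isIpv4 = octets.length === 4 && octets.every(function (octet) {
    return /^\d{1,3}$/.test(octet) && Number(octet) <= 255;
  });
  if (!isIpv4) throw new Error('Not an IPv4 address: ' + ipAddress);

  return {
    HostedZoneId: hostedZoneId,
    ChangeBatch: {
      Changes: [{
        Action: 'UPSERT', // create the record if missing, otherwise overwrite it
        ResourceRecordSet: {
          Name: recordName,
          Type: 'A',
          TTL: ttlSeconds,
          ResourceRecords: [{ Value: ipAddress }]
        }
      }]
    }
  };
}

// Inside a Lambda handler, the result would be handed to the aws-sdk call:
//   new AWS.Route53().changeResourceRecordSets(params, callback);
// All values below are placeholders.
const params = buildDnsUpdate('ZEXAMPLE123', 'home.example.com.', '203.0.113.7', 300);
console.log(JSON.stringify(params, null, 2));
```

Keeping the request builder separate from the SDK call makes it easy to test without AWS credentials. The IAM role then only needs permission to change record sets in that one hosted zone.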

On the client side, the only requirement is to be able to

access the web with an HTTP(S) client. In my case, a curl command in an

hourly cron job fit the bill. I enjoy the flexibility of being able to

implement and consume this as a tiny web service, but it could be made

simpler and more secure by having the client consume the AWS API to

invoke the lambda function directly rather than through the API Gateway.

I put some effort into making sure this Lambda function was as simple as

possible. Outside of aws-sdk, which is available by default in the

Lambda Node.js 4.3 execution environment, no other npm modules are

required. Source code and instructions are available on

GitHub.

Continue reading »

AWS Lambda is unique among PaaS offerings. Lambda takes all the utility grid

analogies we use to explain the cloud and embraces them to the extreme.

Lambda runs a function you define in a Node.js or Java 8 runtime, although you

can execute a subshell to run other kinds of processes. Amazon charges you by

memory use and execution time in increments of 128 MiB of memory and 100ms. The

upper limit for memory use is 1.5GiB and your Lambda function cannot take more

than 60 seconds to complete, although you can set lower limits for both.

There is a pretty generous free tier, but if you exceed the free tier, pricing

is still very friendly. For usage that does exceed the free tier, you’ll be

paying $0.00001667 per GiB*s and

$0.20 for every 1M invocations.

To bring that down to earth, let’s say you write a lambda function that takes

on average 500ms to run and uses 256MiB of memory. You could handle 3.2M

requests before exhausting the free compute tier, but you would pay $0.44 to

handle the 2.2M requests beyond the 1M request free tier. Another 3.2M requests

would cost another $7.31: $6.67 for compute time plus $0.64 in request count charges.
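If you want to check this kind of arithmetic for your own workload, a small calculator helps. The sketch below uses the two prices quoted above. The free tier sizes (400,000 GiB*s of compute and 1M requests) are my reading of the free tier at the time, and the function name `lambdaMonthlyCost` is made up for this example, so verify everything against current AWS pricing before relying on it.

```javascript
// Prices as quoted in the post; the free tier sizes are assumptions to verify.
const PRICE_PER_GIB_SECOND = 0.00001667;
const PRICE_PER_MILLION_REQUESTS = 0.20;
const FREE_GIB_SECONDS = 400000; // 3.2M requests x 0.5 s x 0.25 GiB
const FREE_REQUESTS = 1000000;

// Hypothetical helper: estimate one month's bill for a single function.
function lambdaMonthlyCost(requests, avgSeconds, memoryGiB) {
  const gibSeconds = requests * avgSeconds * memoryGiB;
  const compute = Math.max(0, gibSeconds - FREE_GIB_SECONDS) * PRICE_PER_GIB_SECOND;
  const requestCharge =
    Math.max(0, requests - FREE_REQUESTS) / 1e6 * PRICE_PER_MILLION_REQUESTS;
  return { compute: compute, requests: requestCharge, total: compute + requestCharge };
}

// The scenario from the text: 500ms average run time at 256MiB.
const first = lambdaMonthlyCost(3.2e6, 0.5, 0.25);
const second = lambdaMonthlyCost(6.4e6, 0.5, 0.25);
console.log('First 3.2M requests: $' + first.total.toFixed(2));
console.log('Next 3.2M requests:  $' + (second.total - first.total).toFixed(2));
```

Compute and request charges are returned separately because they have separate free tiers, which is why the first batch of requests can cost something even while compute is still free.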

Since my company’s new static web page

brandedcrate.com needed a contact form handler,

I took the opportunity to learn about how Lambda can provide cheap, dynamic

service for a static site.

In the example below, I’ll show you what I came up with. The idea is that I

would present a simple, static web form to my users and submitting a form would

activate some client-side JavaScript to validate and submit the contents to a

remote endpoint. The endpoint would connect to the AWS API Gateway

service and trigger a lambda function.
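On the browser side, that flow might look something like the sketch below. The helper names and the endpoint URL are placeholders I invented for illustration. The field names match what the Lambda handler in this post reads from its event, and the body is sent as JSON rather than URL-encoded form data.

```javascript
// Hypothetical client-side helper: validate the fields and build the JSON body.
function buildContactPayload(fields) {
  const errors = [];
  // Cheap sanity check only; the server side does the real validation.
  if (!fields.email || fields.email.indexOf('@') < 1) errors.push('email');
  if (!fields.message || fields.message.trim() === '') errors.push('message');
  if (errors.length > 0) return { ok: false, errors: errors };
  return {
    ok: true,
    body: JSON.stringify({
      name: fields.name || '',
      email: fields.email,
      phone: fields.phone || '',
      website: fields.website || '',
      message: fields.message
    })
  };
}

// Hypothetical submit function; the URL is a placeholder, not a real endpoint.
function submitContactForm(fields) {
  const payload = buildContactPayload(fields);
  if (!payload.ok) {
    return Promise.reject(new Error('Invalid fields: ' + payload.errors.join(', ')));
  }
  return fetch('https://example.execute-api.us-east-1.amazonaws.com/prod/contact', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: payload.body
  });
}
```

Validation lives in its own function so it can run before any network request is made. The server still has to repeat its own checks, since anyone can call the endpoint directly.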

The lambda function would perform any required server-side validation and then

use the AWS SDK for Node.js to send an email using AWS Simple Email

Service. Just like any other API endpoint, the

Lambda function can return information about the result of its own execution in

an HTTP response back to the client:

```javascript
var AWS = require('aws-sdk');
var ses = new AWS.SES({apiVersion: '2010-12-01'});

// Returns true when the address looks syntactically valid.
function validateEmail(email) {
  var tester = /^[-!#$%&'*+\/0-9=?A-Z^_a-z{|}~](\.?[-!#$%&'*+/0-9=?A-Z^_a-z`{|}~])*@[a-zA-Z0-9](-?\.?[a-zA-Z0-9])*(\.[a-zA-Z](-?[a-zA-Z0-9])*)+$/;
  if (!email) return false;
  if (email.length > 254) return false;
  var valid = tester.test(email);
  if (!valid) return false;

  // Further checking of some things regex can't handle
  var parts = email.split("@");
  if (parts[0].length > 64) return false;
  var domainParts = parts[1].split(".");
  if (domainParts.some(function(part) { return part.length > 63; })) return false;
  return true;
}

exports.handler = function(event, context) {
  console.log('Received event:', JSON.stringify(event, null, 2));

  // Server-side validation of the required fields
  if (!event.email) { context.fail('Must provide email'); return; }
  if (!event.message || event.message === '') { context.fail('Must provide message'); return; }
  var email = unescape(event.email);
  if (!validateEmail(email)) { context.fail('Must provide valid from email'); return; }

  var messageParts = [];
  // Fall back to the bare address so a missing name doesn't become "undefined"
  var replyTo = event.name ? event.name + " <" + email + ">" : email;
  if (event.phone) messageParts.push("Phone: " + event.phone);
  if (event.website) messageParts.push("Website: " + event.website);
  messageParts.push("Message: " + event.message);

  // Use the first ten words of the message as the subject line
  var subject = event.message.replace(/\s+/g, " ").split(" ").slice(0,10).join(" ");

  var params = {
    Destination: { ToAddresses: [ 'Branded Crate <hello@brandedcrate.com>' ] },
    Message: {
      Body: { Text: { Data: messageParts.join("\r\n"), Charset: 'UTF-8' } },
      Subject: { Data: subject, Charset: 'UTF-8' }
    },
    Source: "Contact Form <hello@brandedcrate.com>",
    ReplyToAddresses: [ replyTo ]
  };

  ses.sendEmail(params, function(err, data) {
    if (err) {
      console.log(err, err.stack);
      context.fail(err);
    } else {
      console.log(data);
      context.succeed('Thanks for dropping us a line');
    }
  });
};
```

Not bad, right? I’ve just added an element of dynamism to my static web site.

It’s highly available, costs nothing, there are no servers to manage and no

processes to monitor. AWS provides some basic monitoring and any script output

is available in CloudWatch for

inspection. Now that basically all browsers support CORS, your users can make

cross-origin requests from anywhere on the web. Setting this up in

AWS

is a bit ugly, but I’m willing to make the effort to get all the benefits that

come along with it.

I’m excited about the possibilities of doing much more with Lambda, especially

the work Austen Collins is doing with his

new Lambda-based web framework, JAWS.

The hardest part about this whole thing was properly setting up the API

Gateway. I tried in vain to get the API Gateway to accept URL-encoded form

parameters, but that was a losing battle. Just stick with JSON.

Continue reading »